stable-diffusion, flow-models, generative-art

2025-04-14

Lately, I've been thinking about how images are represented in feature space.

Where in a neural network does the vibe of an image actually reside?

stable-diffusion, generative-art

2024-05-01

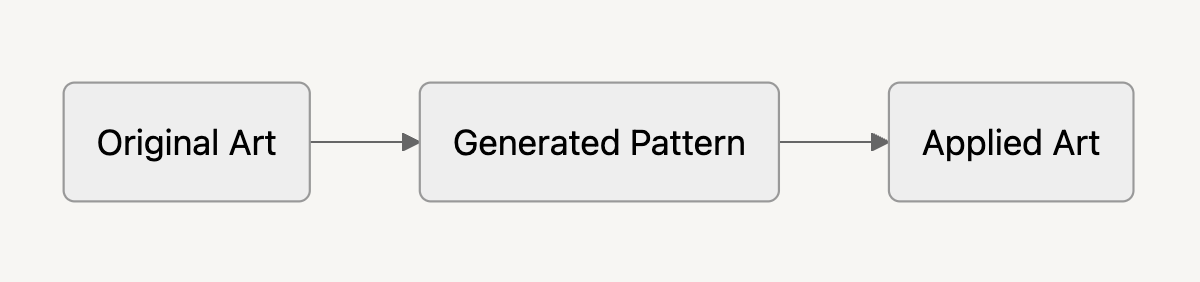

Diagram showing the process of applied generation art


In this study, I explore how AI-assisted design can transform natural forms and original artwork into complex, aesthetically pleasing patterns suitable for fashion applications. The process begins with either a hand-drawn sketch or a photograph of a natural object, which serves as the seed image for Stable Diffusion models.

Methodology:

- Source Image Creation/Selection: Original drawings or photographs of natural objects.

- Pattern Generation: Using Stable Diffusion, create varied patterns based on the source material. Tools explored: Midjourney, SD3, and SDXL.

- Application Visualization: Apply the generated patterns to conceptual fashion designs, again using AI-assisted image generation.
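The pattern-generation step above can be sketched as an image-to-image run. This is a minimal sketch, not the settings used in this study: it assumes the Hugging Face `diffusers` library with an SDXL checkpoint and a CUDA GPU, and the names `Variation`, `plan_variations`, and `generate_patterns`, along with the prompt, strengths, and seeds, are all hypothetical. The idea is to sweep `strength` (how far the output may drift from the source image) and the random seed to get a varied set of candidates to curate from.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Variation:
    prompt: str
    strength: float  # near 0.0 stays close to the source, near 1.0 mostly ignores it
    seed: int


def plan_variations(prompt, strengths, seeds):
    """Enumerate every (strength, seed) combination for one prompt."""
    for s in strengths:
        if not 0.0 <= s <= 1.0:
            raise ValueError(f"strength must be in [0, 1], got {s}")
    return [Variation(prompt, s, seed) for s, seed in product(strengths, seeds)]


def generate_patterns(source_path, variations,
                      model_id="stabilityai/stable-diffusion-xl-base-1.0",
                      steps=30):
    """Run image-to-image once per variation and return the PIL images.

    Assumption: diffusers and torch are installed and a CUDA GPU is available.
    They are imported here so the planning helper works without them.
    """
    import torch
    from diffusers import AutoPipelineForImage2Image
    from diffusers.utils import load_image

    pipe = AutoPipelineForImage2Image.from_pretrained(
        model_id, torch_dtype=torch.float16
    ).to("cuda")
    source = load_image(source_path).resize((1024, 1024))

    images = []
    for v in variations:
        # A fixed seed per variation makes each candidate reproducible.
        generator = torch.Generator("cuda").manual_seed(v.seed)
        result = pipe(
            prompt=v.prompt,
            image=source,
            strength=v.strength,
            num_inference_steps=steps,
            generator=generator,
        )
        images.append(result.images[0])
    return images


# Hypothetical sweep: two strengths x three seeds = six candidate patterns.
plan = plan_variations(
    "seamless textile pattern inspired by the drawing",
    strengths=[0.4, 0.7],
    seeds=[1, 2, 3],
)
```

Calling `generate_patterns("drawing.png", plan)` (with a hypothetical file name) would then produce one image per planned variation. Lower strengths keep more of the original drawing's composition, and higher ones give the model more freedom.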

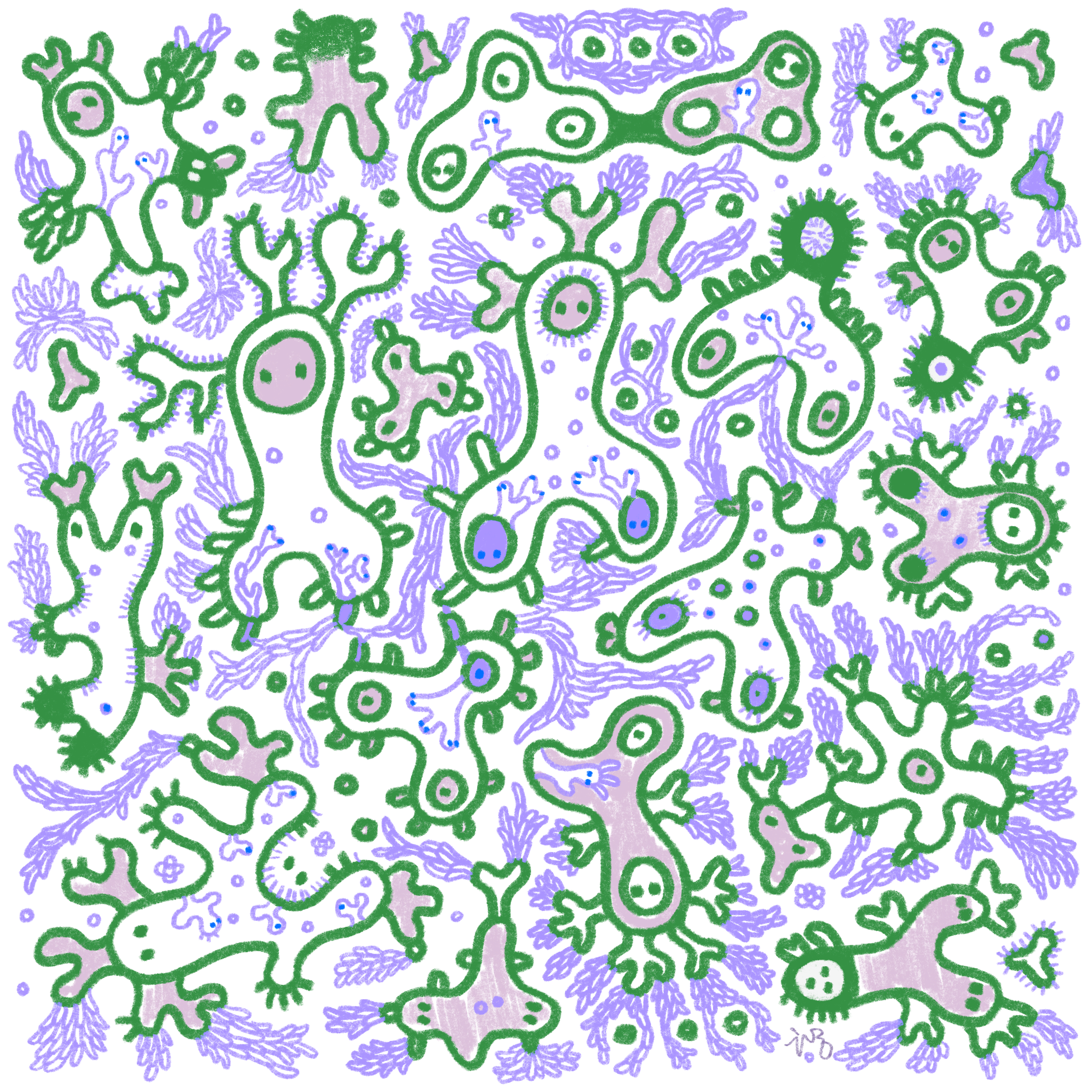

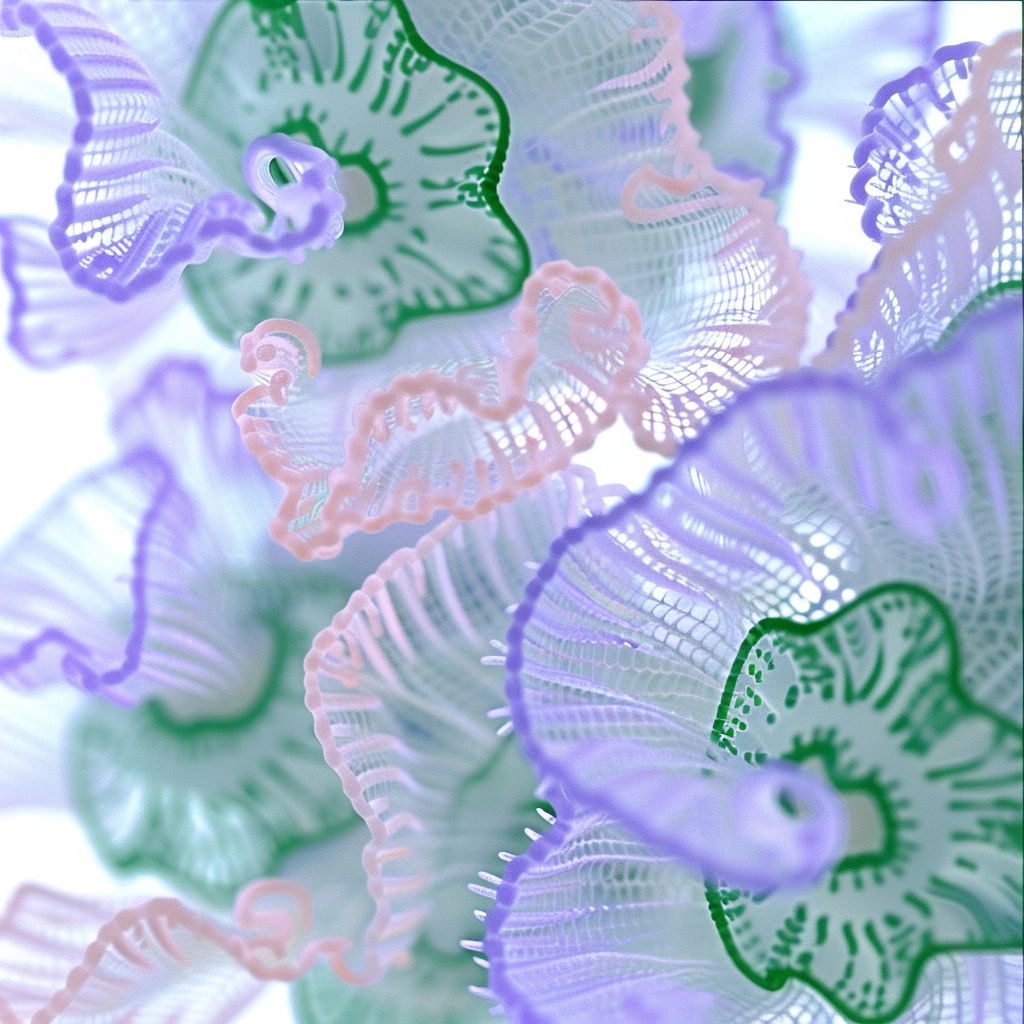

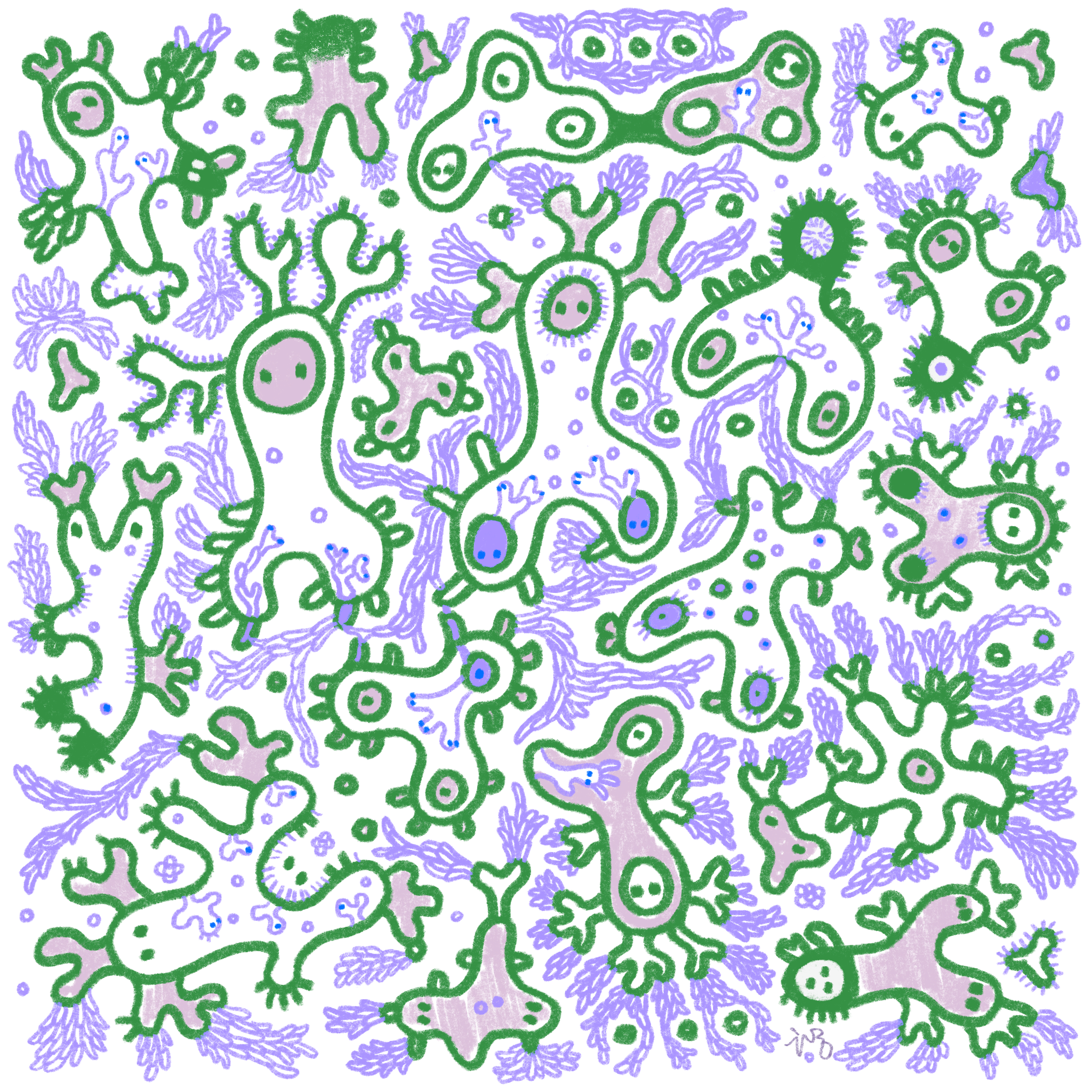

Original Drawing - Dancing in my room 2022


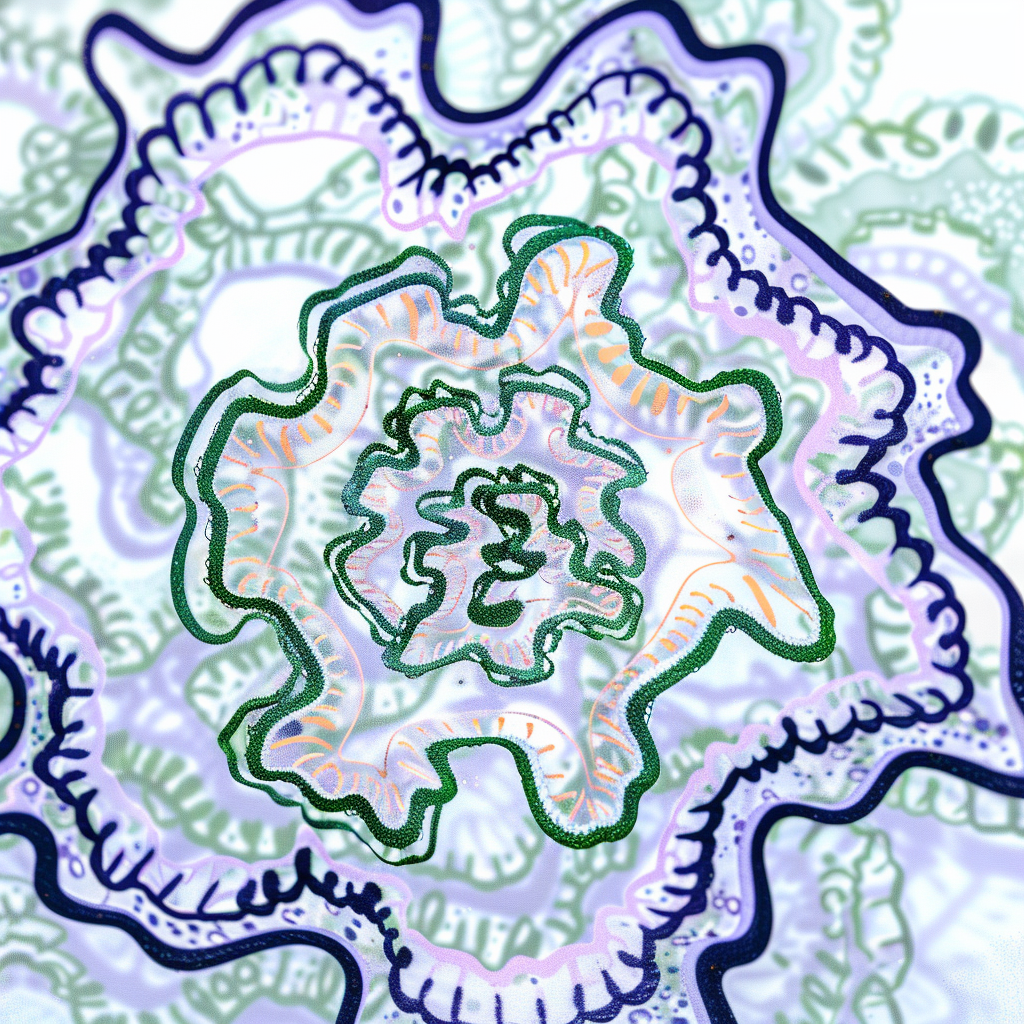

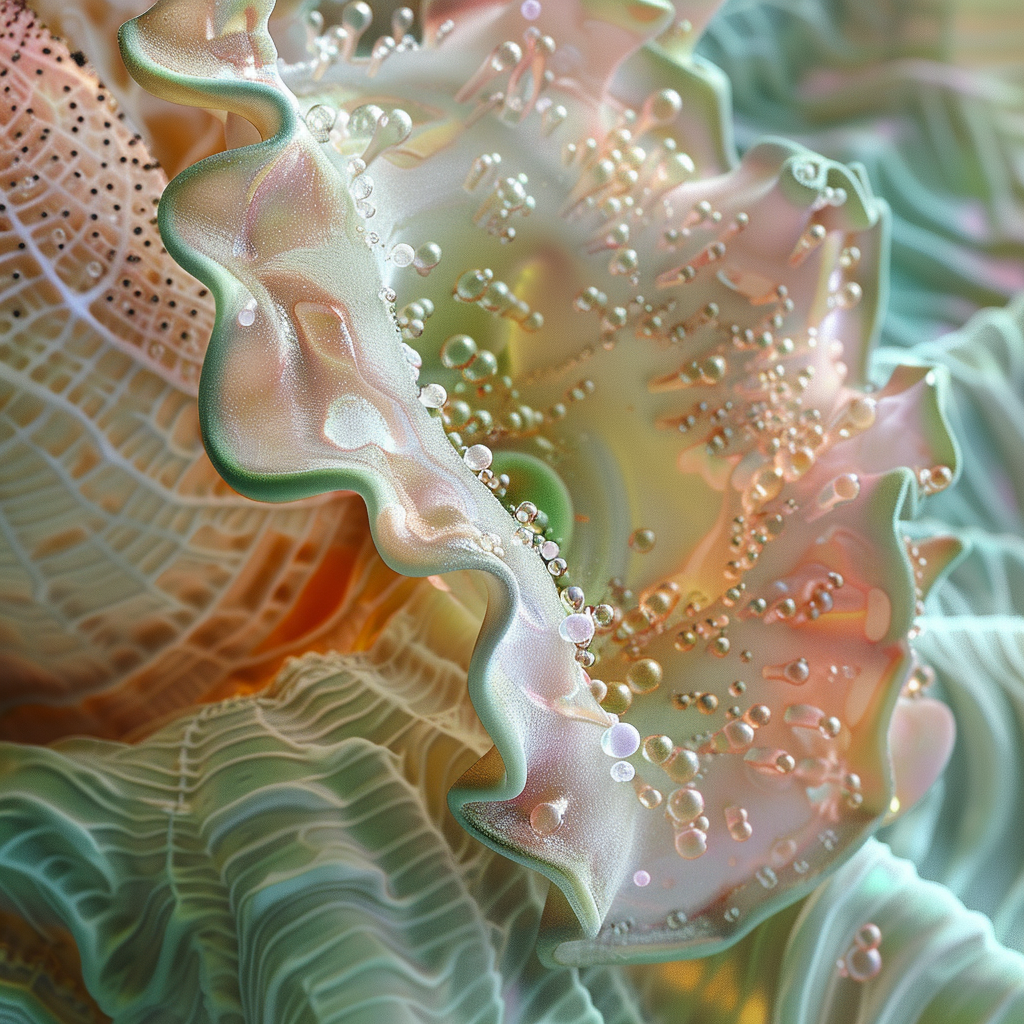

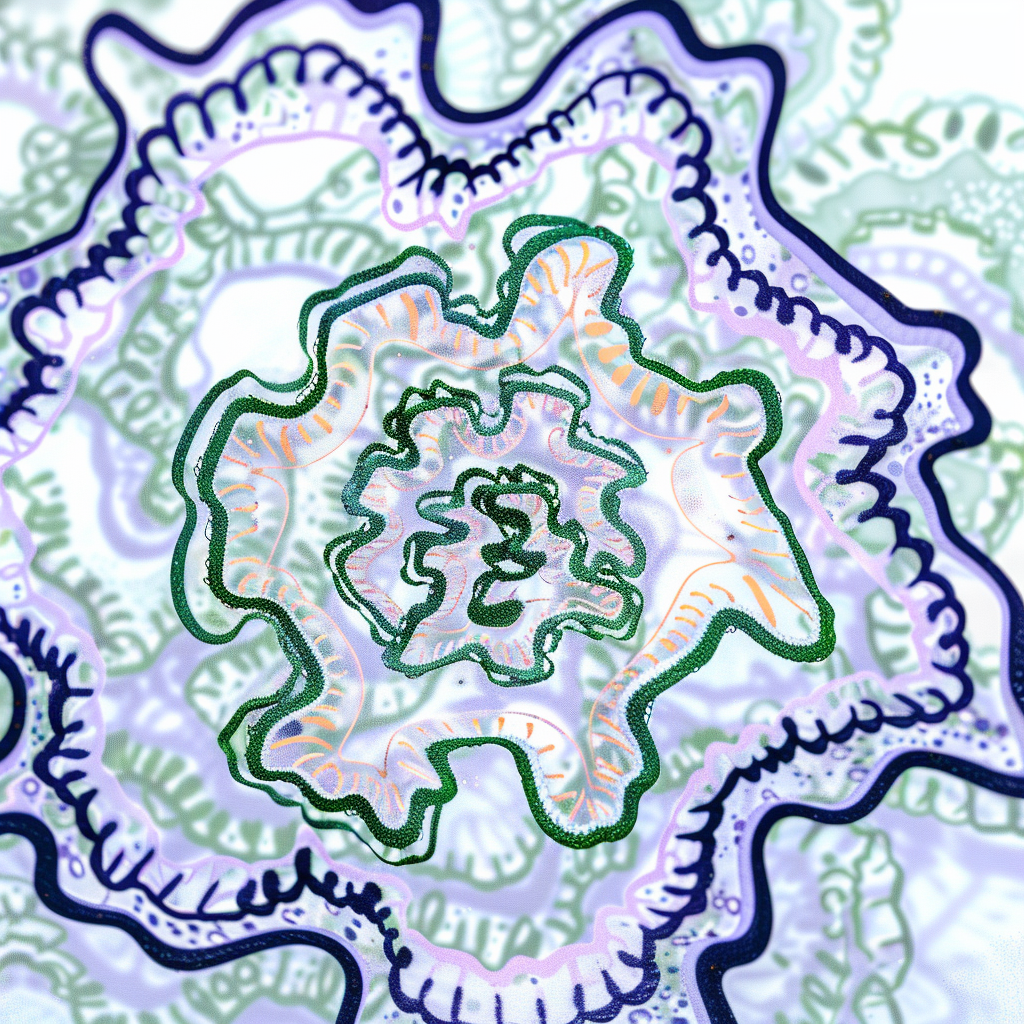

Generated pattern 1 2024


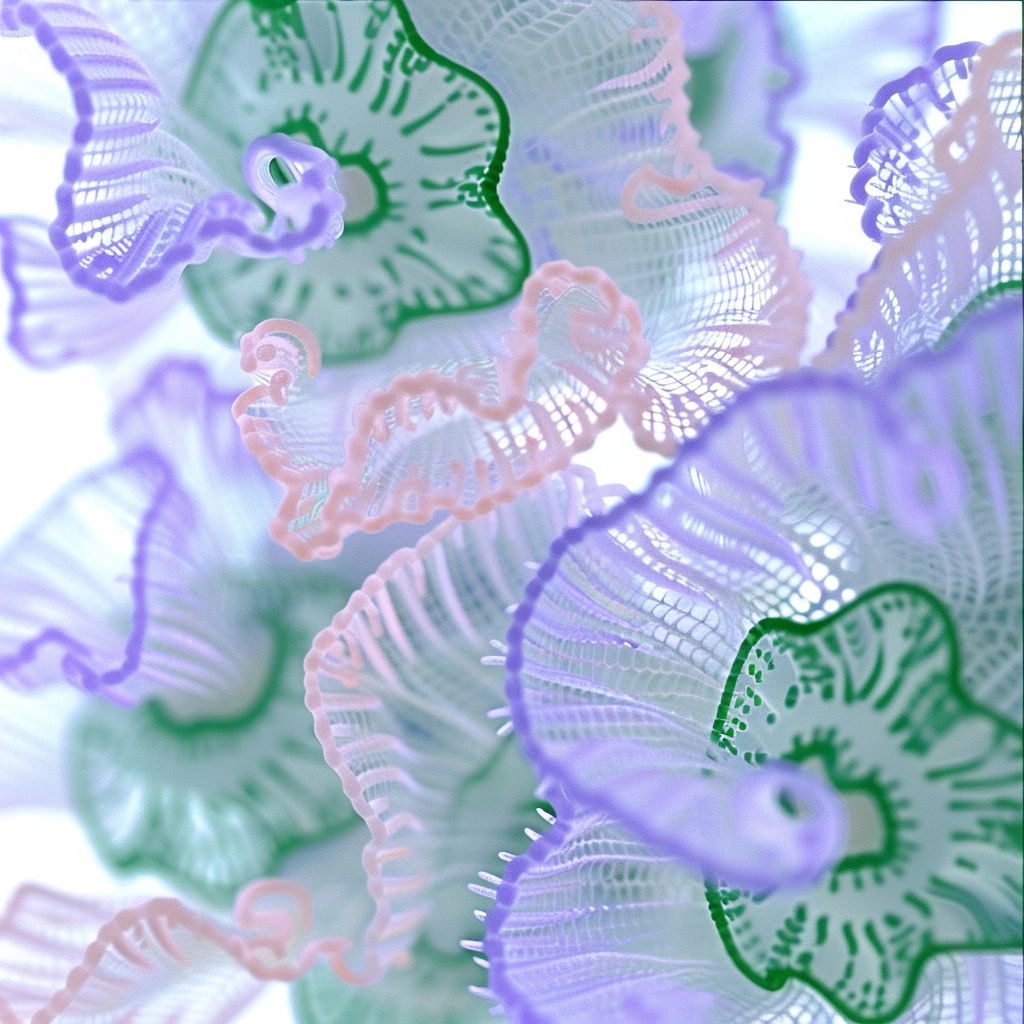

Generated pattern 2 2024


Concept Shot 1 2024


Concept Shot 2 2024


Concept Shot 3 2024


Concept Shot 4 2024


Original Object - Sea Urchin 2024


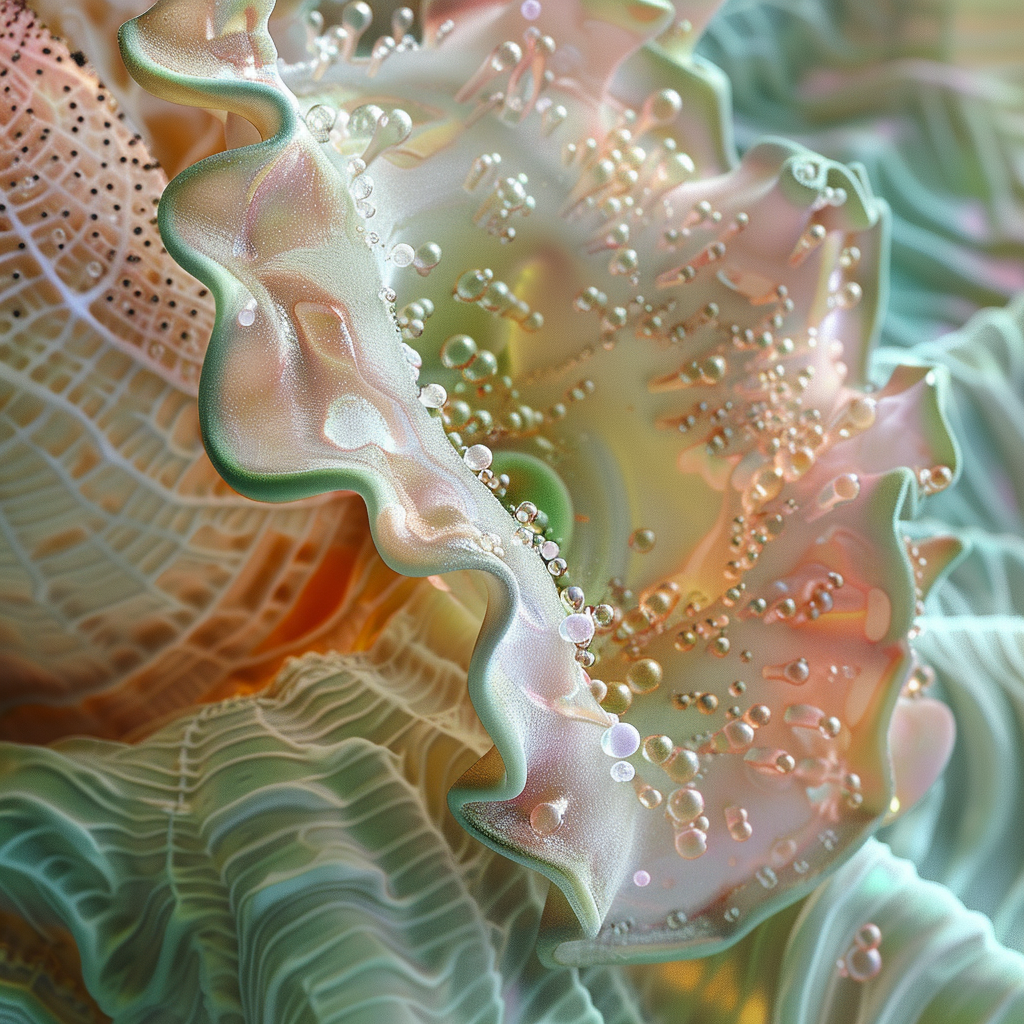

Generated Pattern 2024


Generated Pattern 2024


Concept Shot 1 2024


Concept Shot 2 2024


Concept Shot 3 2024


Concept Shot 4 2024


Learning:

Positives:

- The generated patterns adapted remarkably well to different scales and contexts, from intricate surface textures to bold, sweeping garment designs.

- The interplay between human creativity (in the initial drawings and curation) and AI capabilities produced outcomes that neither could achieve independently.

Negatives:

- The patterns and style of the source image aren't always preserved exactly in the resulting image, suggesting that further fine-tuning may be required for one-to-one matching.

This study demonstrates the potential of AI as a collaborative tool in the creative process, expanding the possibilities of pattern design while maintaining a connection to the artist's original vision or natural inspiration.


Street style:
